| TOPIC REVIEW |

| ullix |

Posted - 12/24/2020 : 02:23:50

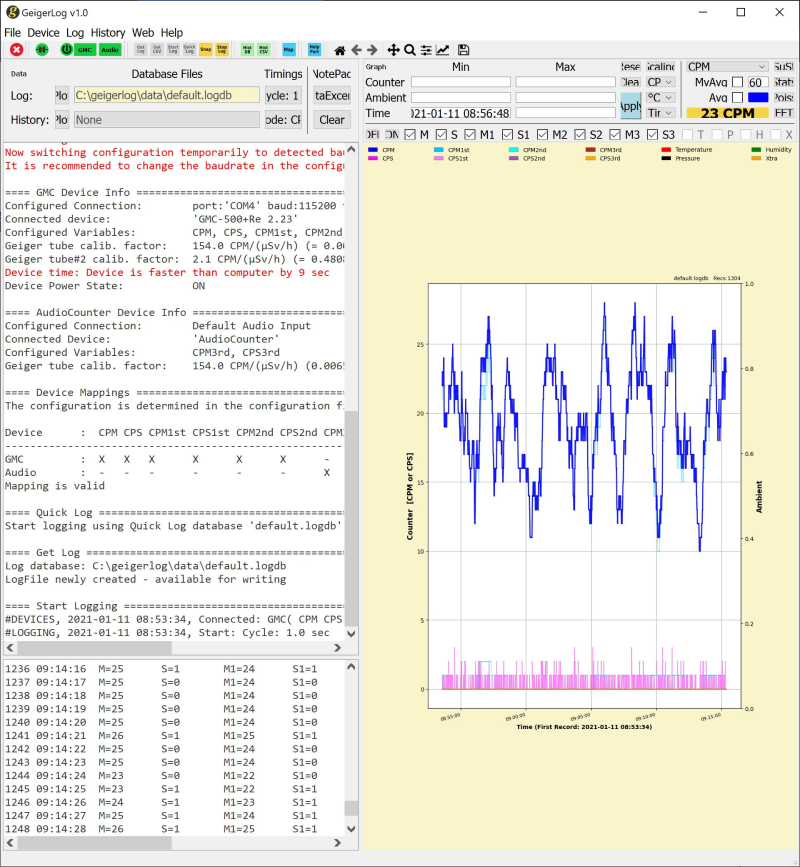

I got my hands on a brand-new counter, with version: 'GMC-500+Re 2.24'. This is one of the 2-tube counters.

First test run got me a big surprise, and not a nice one!

GeigerLog recognized the counter correctly, and set its defaults to measure 3 pairs of values from the counter:

- CPM/CPS: the sum of counts of both tubes (nonsensical, as has been discussed at length already; I will not show these data)

- CPM1st/CPS1st: the counts of the first tube, the "high sensitivity" one

- CPM2nd/CPS2nd: the counts of the second tube, the "low sensitivity" one

I did a first run with the result shown in the pic:

At first glance it looks OK, except that the scatter seems a bit wide. Printing the summary statistics (button 'SuSt' in GeigerLog) shows the CPM1st average as 770, while the variance is 13600. Wow! The two should be similar; this is a huge difference!

Strangely, CPS1st shows an average of 12.75 and a variance of 13.01, truly similar, as it should be.

This is begging for a Poisson test, and here is the result:

Oh dear.

The CPS data look proper: r2=0.999 - surely close enough to a perfect 1.0 - and average 12.75 almost the same as 13.01. It doesn't get much better.

However, the CPM data are a complete mess. While the average of 770.59 is in line with CPS=12.75*60=765, everything else is complete BS.

This is the worst I have ever seen in any counter, be it a GQ counter or any other, including low-cost counters. This GMC500+ is unusable as it stands!

Since the CPS data - so far - look correct, it seems that a major, major bug has been introduced into the firmware, when it comes to calculating CPM.

What I also find strange is that the clock deviates by more than 20 sec over only a 12 hour period. This is as bad as or even worse than the old GMC300 counters, but this counter supposedly has a real-time clock built in, which should be much more precise. Or does it not? Are the two problems related?

I wonder if anyone else has observed this weird effect on their counter? While this is firmware 2.24 on a GMC500+, I guess that the 2.XX firmwares will be similar on all counters. Measure anything and make a Poisson test.
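A minimal sketch of the check described above, in Python (the language GeigerLog is written in). For Poisson-distributed counts the variance should be close to the mean, so their ratio should be near 1. The function name `dispersion_index` is hypothetical and not part of GeigerLog; the numbers are the summary statistics quoted in this post.

```python
import statistics


def dispersion_index(counts):
    """Return variance / mean; close to 1 for Poisson-distributed counts."""
    mean = statistics.fmean(counts)
    return statistics.pvariance(counts, mu=mean) / mean


# Summary statistics quoted in the post (variance / average).
cps_ratio = 13.01 / 12.75    # CPS1st: close to 1, as expected for Poisson
cpm_ratio = 13600 / 770.59   # CPM1st: far from 1

print(f"CPS1st variance/mean = {cps_ratio:.2f}")
print(f"CPM1st variance/mean = {cpm_ratio:.2f}")
```

On a real log, `dispersion_index` would be applied to the recorded CPS or CPM column; a ratio far above 1 is the warning sign described in the post.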

|

| 45 LATEST REPLIES (Newest First) |

| EmfDev |

Posted - 02/27/2025 : 12:52:35

The Fast Estimate setting is under Main Menu -> User -> Settings. At low radiation levels, Fast Estimate does not matter; if you are checking random items around you, Dynamic is best. |

| Tom Johnson |

Posted - 02/23/2025 : 01:30:57

Cross reference to this discussion - https://gqelectronicsllc.com/forum/topic.asp?TOPIC_ID=10468#11745 .

EmfDev, does your Reply #1 in the 800 discussion carry over to the 500+ regarding CPM data?

What is the menu path to the Dynamic Fast Estimate for calculating CPM, which you say defaults to ON but which you recommend changing to OFF? |

| Damien68 |

Posted - 01/15/2021 : 09:11:00

@ullix, as they say in French, I played devil's advocate, but your latest data convinced me. I don't know what can be demanded of these devices, but what is certain is that errors as enormous as those in the latest measurements should be easy to avoid. |

| ullix |

Posted - 01/15/2021 : 08:53:12

quote:

Can I ask why you are using the 2nd tube to illustrate this?

Gosh, how many times have I already shown the distortions of data coming from 1st tube readings? And people still argue that it's kinda, sorta, still ok. No, it isn't.

Now I show a truly gross example from the 2nd tube.

The source is basically my collection of Th gas mantle and some U minerals; just to get high counts.

|

| Damien68 |

Posted - 01/15/2021 : 07:51:19

quote:

Originally posted by WigglePig

Having considered a little more about where this "base value" of 5 comes from, I believe it is likely to be a rounding error in the calculation of the CPM using the FET approach...I wonder if using different FET times might lead to different rounding errors and therefore a different "base value?" In this case, with a FET longer than 3 seconds we would likely see a REDUCED "base value" if this were the case.

We cannot really know where the 5 comes from, but what is striking is the normal output level, which is ~15 CPM in Dynamic mode and ~2.5-3 CPM in 60 seconds mode. |

| Damien68 |

Posted - 01/15/2021 : 07:15:16

quote:

Originally posted by WigglePig

Second, the use of a rolling calculation of CPM "over the past 60 seconds" where reporting one figure *each minute* would be a more reliable approach.

In fact we can use a sliding window while still conforming to the theory of the Poisson distribution. That surprised me too, but after checking, it works.

This enriches the histogram by providing additional relevant "crossed" data.

Each event is registered many times, but each window has a different start and stop time and so gives a different representation; it is like looking at the same thing from several angles.

It is not necessarily stupid, and it certainly adds information.

|

| WigglePig |

Posted - 01/15/2021 : 06:56:16

quote:

Originally posted by ullix

I ran the GMC-500+ overnight, and here I show only the output of the counter for the 2nd, the low-sensitivity tube.

Again, these are values as they are produced by the counter firmware and read by the computer. They are NOT calculated by GeigerLog.

The left half is with a "Fast Estimate Time" (FET) setting of 60 seconds - meaning that FET is switched off - and the right half with FET set to the magic "Dynamic" setting.

Does anyone still claim that the two settings give identical results?

Can I ask why you are using the 2nd tube to illustrate this? Is it because, with lower CPS values, you would expect to see wild swings in the estimated CPM, whereas the 1st tube with its higher sensitivity and higher background counts will mask some of this? |

| WigglePig |

Posted - 01/15/2021 : 06:35:47

quote:

Originally posted by Damien68

I wish I could say it's the same, but I have difficulty.

F*CK! It looks like a bug.

Which one is correct, presumably the left? Or what is the radiation exposure level?

At 20 CPM on tube 2 it would be wise to leave.

And there is still a floor at 5 CPM, sometimes crossed but present. In fact it does not seem to be a dynamic floor at all but a kludge; it's too bad.

Having considered a little more about where this "base value" of 5 comes from, I believe it is likely to be a rounding error in the calculation of the CPM using the FET approach...I wonder if using different FET times might lead to different rounding errors and therefore a different "base value?" In this case, with a FET longer than 3 seconds we would likely see a REDUCED "base value" if this were the case. |

| WigglePig |

Posted - 01/15/2021 : 06:33:03

And herein lies the problem; if you extrapolate based on a small set of samples you WILL get a large uncertainty. If the set of values is near-constant then the overall uncertainty is not huge but is still there. If that set of values has significant variability, like "mostly zero CPS with a few 1s and 2s" then the results you will get for a small set extrapolated (FET is short) to CPM will be wildly variable and largely useless.

In short, if the count rate is near constant then short FET *might* give reasonably smooth extrapolated CPM values but this is unlikely. Where the data is sparse and "bursty" the extrapolated CPM values will be wildly variable and useless...
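The size of this effect can be estimated with a short sketch. It assumes pure Poisson counting and that the estimate is simply the counts in the window scaled up to one minute; the firmware's actual method is not documented in this thread. The function name `relative_sigma`, the list of window lengths, and the 18 CPM background level are illustrative only.

```python
import math


def relative_sigma(true_cpm, window_s):
    """Relative standard deviation of a CPM value extrapolated from window_s seconds."""
    expected_counts = true_cpm * window_s / 60.0   # mean number of counts in the window
    return 1.0 / math.sqrt(expected_counts)        # Poisson: sigma / mean = 1 / sqrt(mean)


for window_s in (3, 5, 10, 20, 30, 60):
    print(f"{window_s:2d} s window: +/- {100 * relative_sigma(18, window_s):.0f} %")
```

Under these assumptions a 3 second window at background level carries a scatter of roughly the size of the value itself, while the full 60 second window is several times tighter.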

I dislike the FET process for two reasons:

First, the variability described above.

Second, the use of a rolling calculation of CPM "over the past 60 seconds" where reporting one figure *each minute* would be a more reliable approach. |

| Damien68 |

Posted - 01/15/2021 : 04:55:58

I wish I could say it's the same, but I have difficulty.

F*CK! It looks like a bug.

Which one is correct, presumably the left? Or what is the radiation exposure level?

At 20 CPM on tube 2 it would be wise to leave.

And there is still a floor at 5 CPM, sometimes crossed but present. In fact it does not seem to be a dynamic floor at all but a kludge; it's too bad.

|

| ullix |

Posted - 01/15/2021 : 04:49:48

I ran the GMC-500+ overnight, and here I show only the output of the counter for the 2nd, the low-sensitivity tube.

Again, these are values as they are produced by the counter firmware and read by the computer. They are NOT calculated by GeigerLog.

The left half is with a "Fast Estimate Time" (FET) setting of 60 seconds - meaning that FET is switched off - and the right half with FET set to the magic "Dynamic" setting.

Does anyone still claim that the two settings give identical results?

|

| Damien68 |

Posted - 01/11/2021 : 06:44:32

Some Textbox fonts are good, some are wrong:

I think there is a problem with some font or font-size declarations.

If font sizes are defined in millimeters, then their real size in pixels is a function of the dpi of the registered screen; on a 4K monitor the dpi is higher, so the final font size in pixels is bigger. |

| WigglePig |

Posted - 01/11/2021 : 06:30:20

quote:

Otherwise, can you start GeigerLog in a batch-file which first switches off scaling, and then starts GL, and on exit of GL switches scaling on again?

I can switch scaling on and off when running GeigerLog, but this leads again to the issue of not being able to read the text on the desktop or in apps. At present I have a workaround that works whilst I am at my workbench: I use an external second display with lower resolution, so if I display GeigerLog on there I can read everything. The issue with the built-in laptop display remains, though.

All that aside, the 500+ is pretty good (it has only alarmed "High" once at work so far) and the GeigerLog software does lots of useful things, with the statistics tools being rather good (I have a degree in mathematics as well as being an {RF} engineer). |

| ullix |

Posted - 01/11/2021 : 04:43:20

@WigglePig: I looked at your log, and can't find anything wrong (as an aside, consider adapting the geigerlog.cfg to your situation - do you presently use audio? set the USB-serial ports).

Chances are, your scaling creates the problem. Obvious question: what happens if you reset it to 100% (or whatever the OFF-setting).

Python's PyQt5 has provisions for scaling, but they still have side effects. But the fact that your other software becomes too small to read suggests that all this other software also cannot handle a hires screen adequately!

There is one thing that is easy to try, though I doubt it helps: start GeigerLog in a different style. You have styles 'windowsvista', 'Windows', 'Fusion' on your system, with the 1st one currently active. Try the other two with (capitalization important!):

geigerlog -dvwR -s Windows

geigerlog -dvwR -s Fusion

Otherwise, can you start GeigerLog in a batch-file which first switches off scaling, and then starts GL, and on exit of GL switches scaling on again?

|

| WigglePig |

Posted - 01/11/2021 : 03:04:43

quote:

Originally posted by Damien68

@WigglePig, what is your screen size in pixels?

The problem can be a conflict if, for example, the size of the controls is defined in pixels and the size of the fonts in millimeters.

3840*2160 but you have just made me think about something...I use the display scaling set to 200% because at the native resolution any text is too small to read. I would imagine this is where the issue comes from. |

| Damien68 |

Posted - 01/11/2021 : 02:55:08

@WigglePig, what is your screen size in pixels?

The problem can be a conflict if, for example, the size of the controls is defined in pixels and the size of the fonts in millimeters.

|

| WigglePig |

Posted - 01/11/2021 : 02:37:59

Sadly I cannot send you things on Sourceforge but I have a file for you...

|

| ullix |

Posted - 01/11/2021 : 02:15:10

Good Lord, you call this "a small problem"? I have never seen anything like it; I think this is horrible. I guess it has something to do with the fonts available on your system. Would you mind helping me figure this out? If so, please start GeigerLog with this command:

geigerlog -dvwR

and publish or send me (e.g. via Sourceforge) the resulting file 'geigerlog.stdlog'.

Re your data: do not forget to set the counter's "Fast Estimate Time" to 60 seconds, or you will get nonsense CPM* data!

The summing of the counts from the 1st and 2nd tube and presenting as CPM and CPS data is a different kind of nonsense, which has been discussed broadly already 2 years ago: http://www.gqelectronicsllc.com/forum/topic.asp?TOPIC_ID=5369

Much was found out back then, enjoy the reading!

Replacing the tube(s) with a different one is possible. I have used - among a few more others - a SBT11A (alpha sensitive) on a GMC300E+ and a 500+. You must observe some things, or render your data useless: use very short leads (<30cm) from your counter to the new tube. If you can't do that, understand the issue of capacitance of the leads, and the need to relocate the anode resistor to physically very close to the new tube.

More discussion here: http://www.gqelectronicsllc.com/forum/topic.asp?TOPIC_ID=4598

(And don't forget to set the anode voltage appropriately). Good luck!

|

| WigglePig |

Posted - 01/11/2021 : 01:30:36

Morning all!

So, I had bought a 320Plus but experienced a display problem with that unit so, after a discussion with Tech Support, it was returned and I now have a 500Plus, which is rather lovely. :-)

After a bit of a faff, I have managed to get GeigerLog working (small problem with button label sizes, see attached image...) and I shall collect some background data to have a play around with the analysis stats that GeigerLog can generate; it looks great too!

One question I have is whether anyone has (a) sorted out the strange treatment of the count values for the two tubes (adding them seems odd) and (b) has anyone replaced one of the tubes with an alpha-capable tube at all?

Tra!

Piggly

Apologies for the HUGE size of the original image...now reduced I hope! |

| Damien68 |

Posted - 01/06/2021 : 06:17:00

Another inconsistency in your approach:

Starting from CPS, you get the CPM distribution you have.

If you started from half-second counts (counts over 0.5 s, updated every half second), you would get a CPM distribution that is certainly still Poissonian but 2 times higher. |

| Damien68 |

Posted - 01/06/2021 : 05:41:17

A CPM value must be the count of events collected during 1 minute; let's call this minute T1.

A second CPM value must be the count of events collected during another minute; let's call this minute T2.

There should be no overlap between T1 and T2; otherwise some events will be counted several times, in your case 60 times.

It's not clear to me whether we can do that within Poisson theory, and in any case I don't understand the relevance of doing it this way.

|

| Damien68 |

Posted - 01/06/2021 : 04:14:11

If it only works with 60 seconds, it is simply due to the following:

- to convert CPS to CPM over 60 seconds we just have to add up the CPS values:

CPM(i) = CPS(i-0) + CPS(i-1) + ... + CPS(i-59)

- to convert CPS to CPM over n seconds:

CPM(i) = A x [CPS(i-0) + CPS(i-1) + ... + CPS(i-(n-1))]

where A = 60 / n is the factor that scales the result to CPM.

If n = 60 => A = 1

If n != 60 => A != 1

It is this factor A which is responsible for the fact that in the end we no longer meet the Poisson criterion 'Average = Variance'.

If Average = Variance in the CPS domain, then after the CPM transformation we obtain Average = Variance/A (easy to demonstrate).

So it is no longer Poissonian if (A != 1) <=> (n != 60).
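The relation Average = Variance/A can be derived in a few lines, assuming the CPS values are independent Poisson counts with a common mean (an idealisation). The symbols S and \lambda are introduced here for the derivation only.

```latex
\begin{align*}
S &= \sum_{k=0}^{n-1} \mathrm{CPS}(i-k) \sim \mathrm{Poisson}(n\lambda)
  \quad\Rightarrow\quad \mathrm{E}[S] = \mathrm{Var}[S] = n\lambda \\
\mathrm{CPM}(i) &= A\,S, \qquad A = \frac{60}{n} \\
\mathrm{E}[\mathrm{CPM}] &= A\,n\lambda = 60\,\lambda \\
\mathrm{Var}[\mathrm{CPM}] &= A^{2}\,n\lambda = 60\,A\,\lambda \\
\frac{\mathrm{Var}[\mathrm{CPM}]}{\mathrm{E}[\mathrm{CPM}]} &= A
\end{align*}
```

With n = 60 the ratio is 1; with n = 3 it would be 20 under these assumptions.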

It's not that simple. There are also other factors (see below), but this alone is already disqualifying for n != 60.

But this is not a problem at all: we have the primary data in CPS for statistical analyses.

CPMs are not primary data and are not meant for statistical analyses.

PS: With n=60, what you consider is not a primary Poisson distribution but a sum of 60 primary Poisson distributions, which apparently remains Poissonian, but this is not at all standard practice.

You can check it:

With n = 60, if you add up all the events registered in your CPM histogram (the sum over all bars of (bar value x bar index)), you will get the total number of events received during the test period multiplied by 60; normally we would get the total number of events multiplied by 1.
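The counting argument can be tried on a small made-up record. This sketch assumes the CPM values are a plain 60 second sliding sum updated every second; `sliding_cpm` and the sample data are hypothetical. The record is padded with quiet seconds so that every event falls into exactly 60 windows.

```python
from collections import Counter


def sliding_cpm(cps, window=60):
    """Sum of each run of `window` consecutive CPS values (overlapping windows)."""
    return [sum(cps[i:i + window]) for i in range(len(cps) - window + 1)]


# Made-up CPS record: 120 seconds of events, padded with 59 zeros on each side.
events = [0, 1, 0, 2, 1, 0, 0, 3, 1, 0] * 12
cps = [0] * 59 + events + [0] * 59

# Histogram: bar index (CPM value) -> bar height (number of occurrences).
histogram = Counter(sliding_cpm(cps))
weighted_sum = sum(cpm * height for cpm, height in histogram.items())

print(sum(events), weighted_sum)
```

The weighted sum comes out as 60 times the number of events, which is the multiplicity described above. It says nothing by itself about the shape of the histogram.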

So for me all your CPM histograms are 60 times too high. |

| ullix |

Posted - 01/06/2021 : 02:31:41

I just did a test on all settings of the Fast Estimate Time:

It fails at every setting except 60 sec! EVERY!

Did you ever test it? It becomes obvious after less than a minute of runtime. Please, what is the purpose of it, and what is the rather absurd algorithm behind it?

|

| ullix |

Posted - 01/06/2021 : 00:28:12

More "Weirdness", indeed.

With the "Fast Estimate Time" switched from "Dynamic" to "60 seconds" the CPM data of both tubes came out properly in an overnight run.

So, you and GQ knew that this setting creates nonsense, yet you set it even as default, with no warning about the use of it?

What is this anyway, what is the reason for it, and what is the algorithm applied? What is the difference to the other 60... 5 sec and what is their algorithm?

I suppose this is a new setting in the counter configuration memory. And since it is ruining any recording, where can I find it in the configuration and switch it off from within GeigerLog? Could you please publish the new configuration mapping?

The clock remains as bad as it was at a drift of some 20 sec per day, or is there also some secret setting, where the clock can be made to behave well?

This is frustrating for any serious work.

|

| EmfDev |

Posted - 01/04/2021 : 16:49:17

@ullix, did you disable the Dynamic Fast Estimate for calculating the CPM? It comes with Dynamic Fast Estimate as default.

@Damien, you might be right that it is because of the fast estimate for CPM. |

| ullix |

Posted - 01/02/2021 : 01:19:52

@EmfDev, @ZLM:

By now you have had plenty of time to verify the defects that I have shown.

Is a firmware update available, or, if not, when will it be?

|

| Damien68 |

Posted - 12/31/2020 : 12:58:36

By rereading it all, I think we agree on the whole.

I'm not so new to Mr Poisson's diagrams; I saw them at university in physics class, but that was 30 years ago. So I forgot, but I am catching up at subatomic speed.  |

| Damien68 |

Posted - 12/31/2020 : 09:58:38

To sum up, the CPMs we have been talking about from the start are not detected CPMs but speculative data at the CPM scale, which is confusing. Maybe these data are Poisson-compatible on old devices but not on new ones. By turning off the quick estimate, there is a chance that they will become compatible again.

|

| Damien68 |

Posted - 12/31/2020 : 08:49:18

But on some counters you can configure the quick estimate mode; if you can turn it off, there is a chance that you will get more consistent data.

But I don't see the point of talking about a CPM refreshed every second. It is better to consider only CPS, unless we want to talk about vortices and the relativity of time.

In all this, only the CPS values are primary data that meet the Poisson assumptions without ambiguity and without needing demonstration.

Building CPM by adding up 60 CPS values once each minute is OK too; this remains primary data, without ambiguity.
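The once-per-minute sum can be sketched as follows. The function name `per_minute_cpm` is hypothetical, and dropping an incomplete last minute is an assumption made here, not something stated in the thread.

```python
def per_minute_cpm(cps, seconds_per_block=60):
    """Sum CPS readings in consecutive, non-overlapping blocks.

    A partial block at the end of the record is dropped.
    """
    full = len(cps) - len(cps) % seconds_per_block
    return [sum(cps[i:i + seconds_per_block]) for i in range(0, full, seconds_per_block)]


# 150 seconds at a constant 1 count per second: two full minutes, 30 s discarded.
print(per_minute_cpm([1] * 150))   # [60, 60]
```

Each count contributes to exactly one CPM value here, so the values are independent of each other.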

If we build an estimator/observer to get, each second, an estimate of what things should be over 1 minute, we are no longer dealing with primary or observed data. Everything remains to be demonstrated and proven depending on the algorithms used; it is not primary data (measured CPM) but more speculative data. If it works with the old counter model, it is because the estimation algorithm must be neutral on those models with respect to the statistical interpretation made afterwards, but that is not guaranteed.

|

| Damien68 |

Posted - 12/31/2020 : 07:53:50

I understood everything correctly, but what I am saying is that the CPM on the new counter is not a CPM at all but a quick estimate, which is not compatible at all, and that the problem comes from there.

And in the old model, the CPM is probably a simple moving sum of the last 60 CPS values, or something else, but certainly not the same algorithm.

The violation is proven by your raw data in #3:

If a detection occurs (1 CPS), it is passed on to a first CPM value as 15 events, to a second CPM value as 15 events, and to a third CPM value as 15 events.

So 1 CPS event is translated into many events in the pseudo-CPM domain.

quote:

Originally posted by ullix

As mentioned it has firmware 1.22. The new, bad one has 2.24. I'd be skeptical of anything 2.x!

welcome to the field of artificial intelligence.

|

| ullix |

Posted - 12/31/2020 : 07:44:28

quote:

So counter CPM don't meet poisson requirement.

I'm sorry, but this is nonsense. I really recommend some studying of a primer on poisson; you should know by now where to find it.

It does not matter whether you do CPS, CPM, Counts-per-Hour, Counts-per-Month, or Counts-per-17.834 seconds - they will ALL be poissonian! What you MUST NOT do is change the time-interval during recording (what the GammaScout counters like to do) and you MUST NOT multiply any values of any of the counts-per-time-interval by any number different from exactly 1.

Think about it: a background CPS with average 0.3 results in an average CPM= 0.3 * 60 = 18. But the record of CPS might consist of 0, 1, 2, and perhaps 3. Multiply these with 60 and you get 0, 60, 120, 180 for the CPM record. So, what happened to the numbers from 0 to 180 missing in this list? They are absent, and will never exist. Such an outcome happening by statistical chance is as likely as the famous herd of monkeys writing Shakespeare! Such a multiplied list is clearly non-poissonian.

It gets even worse when you multiply with non-natural numbers - like a calibration factor. Then you get a list of numbers with decimals, and thus they will never be poissonian, as Poisson is valid solely for natural numbers (plus the zero), and invalid for everything else!
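The gaps are easy to make visible on a small made-up CPS record. The calibration factor below is a hypothetical value chosen only to show the effect of a non-integer multiplier.

```python
# Made-up background CPS record containing only the values 0, 1, 2 and 3.
cps = [0, 1, 0, 0, 2, 0, 1, 0, 0, 0, 1, 0, 0, 3, 0, 1, 0, 0, 0, 0]

# "CPM" obtained by multiplying each CPS value by 60 instead of summing 60 values.
scaled = [60 * c for c in cps]
reachable = sorted(set(scaled))
missing = [v for v in range(max(scaled) + 1) if v not in reachable]

# Hypothetical calibration factor applied on top: the values are no longer integers.
calibrated = [0.0065 * v for v in scaled]

print(reachable)       # [0, 60, 120, 180]
print(len(missing))    # 177
print(any(not float(v).is_integer() for v in calibrated))   # True
```

Of the 181 integers from 0 to 180, only 4 can ever occur in the multiplied record, which no Poisson distribution with mean 18 would produce.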

To demonstrate that both a counter and GeigerLog can deliver proper results, I repeated the last experiment in the same setup and analysis, but this time using an old GMC500+ counter with firmware 1.22.

Using the CPS data from variable CPS1st gives a record of text-book quality:

Then looking at the CPM data, either CPM1st created by the counter, or CPM3rd calculated by GeigerLog from the CPS1st data, gives this outcome:

Now the GeigerLog trace (in brown) is exactly on top of the counter trace (in light blue). In order to make both visible I made the blue line much wider and the brown line much thinner to (kind of) show that the brown line is running inside the blue line.

And look at the Poisson histograms: even the distortions in the columns and curves show up identically in both graphs!

This old counter is the one reviewed here: http://www.gqelectronicsllc.com/forum/topic.asp?TOPIC_ID=5369 We had shown that it has a couple of problems. But inability to calculate CPM was NOT a problem at any time.

As mentioned it has firmware 1.22. The new, bad one has 2.24. I'd be skeptical of anything 2.x!

|

| Damien68 |

Posted - 12/31/2020 : 03:51:23

Nice, your representation clearly highlights the difference in variance between the real CPM from GeigerLog and the GMC CPM.

It's clear that the counter CPMs have nothing to do with CPMs. The counter CPM must be the pulse count during the last 3 seconds multiplied by 20, or something like that. Because of that, it does not take purely discrete values (1, 2, 3, 4, ...) but typically much larger steps (20, 40, 60, ...), plus an offset (long-term integration) and a ratio adjustment to normalise the output to be consistent with CPM.

In addition, the counter CPM is an autocorrelated signal, because 1 pulse has an impact on at least 3 consecutive CPM values.

So for at least 2 reasons the counter CPM doesn't meet the Poisson assumptions.

See 'Assumptions and validity' in

https://en.wikipedia.org/wiki/Poisson_distribution

Of course, your CPM built by adding up 60 CPS values meets the assumptions and is a real CPM.

I think you can just ignore the counter CPMs and replace them with the ones from GeigerLog.

Anyway, there is no interesting statistical information in the GQ CPM; it is just the value displayed in real time on the counter display. This information can be highly relevant for real-time display but has no other direct use.

It could still allow detecting fast transients with a response time of the order of 3 seconds, but we can do the same processing on the CPS data, typically with a 3-second moving average of the CPS.
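The hypothesis in this post (pulses in the last 3 seconds multiplied by 20) can be written down as a short sketch. This is a guess at the behaviour as described here, not the firmware's algorithm, and the function name `short_window_cpm` is hypothetical.

```python
def short_window_cpm(cps, window_s=3):
    """Scale the sum of the last `window_s` CPS readings up to a per-minute value."""
    factor = 60 / window_s
    return [
        factor * sum(cps[i - window_s + 1:i + 1])
        for i in range(window_s - 1, len(cps))
    ]


# A single pulse shows up in three consecutive estimates, each in steps of 20.
print(short_window_cpm([0, 0, 1, 0, 0, 0]))   # [20.0, 20.0, 20.0, 0.0]
```

Both effects named above are visible: the output moves in steps of 20, and neighbouring values share the same pulse, so they are correlated.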

|

| ullix |

Posted - 12/31/2020 : 01:02:30

So far it looks like this GMC500+ counter is producing the CPS values correctly, but the CPM values are completely messed up. I looked into that in more depth.

I am using the CPS signal from the first, the high-sensitivity tube, recorded in GeigerLog as CPS1st. This typically had been an M4011 tube, but it seems there is a different one in this GMC500+ counter: I read the label as M01-22, though it is a bit difficult to decipher without opening the case.

The CPS recording and its Poisson histogram are shown in the next picture.

It is not perfect, but very reasonable. These CPS data look ok to me.

The CPM data are nothing but the sum of the last 60 seconds of CPS data, and this is easy to program. So I customized GeigerLog to take the CPS1st counts and create CPM values recorded as variable CPM3rd. (This is not in the current 1.0 release of GeigerLog, but if anyone is interested, I'll leave it in for the next release.)
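A minimal sketch of such a rolling sum, processing CPS readings one at a time as they arrive. This is not the GeigerLog code; the name `rolling_cpm` is hypothetical, and reporting nothing until 60 readings are available is an assumption.

```python
from collections import deque


def rolling_cpm(cps_values, window=60):
    """Return the sum of the last `window` CPS readings, one value per second.

    No value is reported until the window is full.
    """
    recent = deque(maxlen=window)
    total = 0
    out = []
    for cps in cps_values:
        if len(recent) == window:
            total -= recent[0]      # oldest reading is about to drop out
        recent.append(cps)
        total += cps
        if len(recent) == window:
            out.append(total)
    return out


print(rolling_cpm([1] * 60 + [0, 0]))   # [60, 59, 58]
```

No multiplication is involved, so every output is an integer count of events actually seen in the last 60 seconds.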

The next pic shows the CPS1st data as the bottom trace in magenta, the CPM1st data (the ones created by the firmware of the GMC500+ counter) in light blue, and the CPM3rd data (the ones calculated by GeigerLog from the CPS1st data) in dark brown.

It doesn't need sophisticated math to see that there is a huge difference between the two CPM traces, but I am showing the Poisson histograms for the two traces anyway. The counter's CPM1st data are not even close to a Poisson distribution!

Without any doubt, the GMC500+ counter is producing nonsense CPM values. And my guess is, any counter using firmware 2.24 (and other?) will be producing the same nonsense.

How can one possibly mess up the calculation of the sum of 60 values, whatever the computer or the programming language?

|

| Damien68 |

Posted - 12/30/2020 : 00:00:51

The phenomena highlighted in red are typical aliasing problems.

They are due to the fact that at least one of the following variables is not clocked synchronously with the others.

As presented, we have 4 variables:

- GMC internal time.

- PC time (used as reference).

- GMC RTC read trigger (the precise moment when the GMC reads the time from its clock).

- PC time read trigger (the precise moment when the PC reads its time).

There are many possible causes for this phenomenon.

This aliasing highlights jitter between some of these 4 variables.

Jitter is a random component in the timing of a cyclical event.

We already know that we have 2 variables which are not synchronous, the PC clock and the GMC clock, but the other variables are also important.

This jitter is not necessarily very large, because we have 2 very close frequencies (RTC clock and PC clock), and therefore the phase shift between the two (RTC vs PC) evolves continuously and very slowly. The phase shift between these two clocks also regularly becomes very small, near zero, and then increases again.

So there are critical moments (considering triggers and clocks) where we see apparent instability even with small jitter, but this is normal.

If we lose 17 s every 14 hours, the phase shift between the two clocks completes a cycle (360°) every 49 minutes.

So we should observe a maximum aliasing effect every 49 minutes. This is purely theoretical; there may be other factors.
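The 49 minute figure follows from simple arithmetic, assuming both clocks tick once per second and the drift is constant over the run. The function name `beat_period_minutes` is hypothetical.

```python
def beat_period_minutes(drift_s, elapsed_h):
    """Minutes needed for two 1 Hz clocks to slip by one full second (one 360 degree cycle)."""
    return elapsed_h * 60 / drift_s


print(f"{beat_period_minutes(17, 14):.1f} min")   # 49.4 min
```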

Anyway, this changes nothing about the drift issue.

|

| Damien68 |

Posted - 12/29/2020 : 03:16:51

It is possible that my hardware is an old version which may have been revised since.

For that reason it would have been nice to see whether there is still a quartz crystal on the microcontroller in your device. Most microcontrollers can also run from an embedded clock that is not very precise (RC type).

On my hardware version there is an external RTC with backup power, but often it is only read at boot time to initialize a second, internal CPU clock, to avoid asynchronous problems. |

| Damien68 |

Posted - 12/29/2020 : 01:27:15

I have firmware rev 2.18

Hardware v 5.2

For my test I use the elapsed timer available in the text mode display.

Over 20 hours I see no perceptible shift against my PC clock; if there is one, it is less than 1/2 second.

That only says that there is at least one good clock in my counter. The CPS sampling isn't necessarily triggered by this same clock.

To deduce more, it would be necessary to know the architecture of the software. |

| ullix |

Posted - 12/29/2020 : 00:35:11

Which firmware version has your counter?

No, I won't open the counter. I will return it as a defect device.

The quietness of GQ to this topic speaks volumes. This counter is due for a recall. I guess we won't see it. People will be using this piece of crap, and spreading nonsense data all over the world.

|

| Damien68 |

Posted - 12/28/2020 : 07:24:44

I started a test with my GMC-500+. It has been 2 hours, which is a bit short of course, but I'll see over time.

At the moment I have no observable clock drift.

Can you check for the presence of C15, C19 and X1?

C15 and C19 are the load capacitors for the quartz crystal.

A deviation of 1 millisecond per year represents a drift of 3.17 x 10^-11.

On the ESP32 (a WiFi device), the standard quartz must have a precision of around 10 ppm to meet the WiFi specifications.

1 ppm is 1 part per million, i.e. 1 x 10^-6,

so the standard ESP32 quartz precision must be around 10 x 10^-6,

which gives a maximum shift of 864 ms over 1 day.
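These conversions can be reproduced with a few lines. The helper names are hypothetical; a year is taken as 365 days, and the 20 s per day figure is the drift reported for the new counter elsewhere in this thread.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY


def drift_fraction(error_s, elapsed_s):
    """Clock error as a dimensionless fraction of elapsed time."""
    return error_s / elapsed_s


def shift_per_day_s(fraction):
    """Seconds gained or lost per day for a given fractional drift."""
    return fraction * SECONDS_PER_DAY


print(f"{drift_fraction(1e-3, SECONDS_PER_YEAR):.2e}")           # 1 ms/year -> 3.17e-11
print(f"{shift_per_day_s(10e-6) * 1000:.0f} ms")                 # 10 ppm    -> 864 ms
print(f"{drift_fraction(20, SECONDS_PER_DAY) * 1e6:.0f} ppm")    # 20 s/day  -> 231 ppm
```

On this scale a 20 s per day error is more than twenty times the 10 ppm quoted for a standard crystal.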

We can achieve a precision of the order of 10^-11 = 0.01 ppb (parts per billion) with a small rubidium atomic clock, as used for example in cell towers,

but it costs between 2,000 and 8,000 USD.

It works like a standard atomic clock but with only one element.

It is a microwave generator whose frequency is driven by a control loop to keep it in the region where it is most strongly absorbed by rubidium.

It's not very precise for an atomic clock, but it's surprising for a small component like this.

|

| ullix |

Posted - 12/28/2020 : 02:15:42

I am confused by the GMC500+ clock being off by some 20 sec per day. This is huge!

It is easy to record the time difference between the GMC counter time and the real time: in the geigerlog.cfg configuration file simply activate the variable X for GMC devices. You then get the time difference computer-time minus device-time in seconds under the variable X in the log record.

The computer time has a resolution of 1 microsecond, and is kept synchronized with an NTP time server.

I did the recording and here is the result:

There is a continuous and consistent divergence of device time from real time. (The steps have to be 1 sec, as this is the best resolution of the GMC counter clock.) On top of this, every now and then the device time flips back and forth inconsistently; see inset.

What on earth was done in the electronics and/or the firmware to create a clock as bad as this one?

Just for some reference: I am using an ESP32 microprocessor, and its clock deviation is measured in MILLISECONDS per YEAR! |

| Damien68 |

Posted - 12/26/2020 : 08:30:45

It's not very easy to evaluate Mr. Poisson's law for large values; how do you calculate the factorial of 770?
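One common way around the huge factorial is to work with logarithms, using the log-gamma function from the Python standard library (lgamma(k + 1) equals ln(k!)). This is a sketch of that general technique; how GeigerLog computes its Poisson curve is not stated in this thread, and the function name `poisson_pmf` is hypothetical.

```python
import math


def poisson_pmf(k, mean):
    """Poisson probability of observing k counts, computed in log space.

    Requires mean > 0. The factorial k! never appears explicitly.
    """
    return math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))


# Probability of exactly 770 counts when the average is 770 (about 0.0144).
print(poisson_pmf(770, 770))
```

Evaluating this for every bin of the histogram gives the theoretical curve without any overflow, even for averages in the hundreds or thousands.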

Presumably you do this with simplifications after aggregating the data. |

| Damien68 |

Posted - 12/26/2020 : 07:32:14

The whole answer is in your tabular data for the 2nd tube.

Looking at that tabular data, it is clear that the CPS is genuine and must match the CPS really measured on the tube.

On the other hand, in these data the CPM is clearly an estimate built by an algorithm, which I think explains everything.

For tube 1 we must have exactly the same type of filtering.

On the FFT, the pronounced dip at 3 seconds would correspond to an internal convolution performed by the algorithm. We can see this convolution/integration effect (over 3 seconds) in your tube 2 CPM raw data: each primary CPS detection has an effect on 3 consecutive CPM values. (Your FFT algo is very good.)

That would also explain the excess variance in the CPM data, for several reasons, and we can't treat these CPM data as uncorrelated, because they are correlated.
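Why a 3-second convolution would put a dip at exactly 1/3 Hz can be checked numerically. The sketch below assumes the simplest possible filter, an unweighted mean of the last 3 one-second samples; the actual firmware algorithm is unknown.

```python
import cmath
import math


def boxcar_gain(freq_hz, taps=3, sample_rate_hz=1.0):
    """Magnitude response of an unweighted moving average over `taps` samples."""
    omega = 2.0 * math.pi * freq_hz / sample_rate_hz
    response = sum(cmath.exp(-1j * omega * n) for n in range(taps)) / taps
    return abs(response)


for period_s in (60, 10, 4, 3):
    print(f"period {period_s:>2} s: gain {boxcar_gain(1.0 / period_s):.3f}")
```

For such a filter the gain falls to (numerically) zero at a period of 3 s, and neighbouring output values share input samples, so they are no longer independent of each other.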

To get the real CPMs, they must be available in the flash memory of the GMC, and only 1 per minute, not 60 (if you set recording to CPM, not to CPS). Or you can also sum the CPS in packs of 60.

The CPM values you use are intended to be displayed on the screen or to be used as is, but not in this way.

But the point of doing statistics on CPMs that we receive every second is not clear to me.

The blue auto-correlation lag comes from the same convolution (which is logical).

PS: the filter looks like a moving average over 3 seconds, but it can be more complex, and it's not necessarily linear (there can be several estimation modes and triggers).

On the CPM2nd data,

the 5 CPM offset can be a long-term integration part:

to make a compromise between reactivity and stability, maybe the response dynamic is split into two parts,

a quick part based on the last 3 seconds

+ a longer-term part,

knowing that the whole thing can be rebuilt when a trigger fires.

|

| Damien68 |

Posted - 12/26/2020 : 07:04:15

Okkkkkkkkkk

You are right, I did not know Mr. Poisson's law precisely; it applies perfectly and is the reference for 100% random events, unlike the normal law.

And if I apply Standard Deviation = Square root of average with the normal distribution algorithm, I find results consistent with your Poisson curve.

Sorry for my mistake. It's stupid, it's elementary.

|

| ullix |

Posted - 12/26/2020 : 02:36:03

For anyone looking for some basic primer on Poisson statistics see my article Potty Training... on pages 8-11: https://sourceforge.net/projects/geigerlog/files/Articles/GeigerLog-Potty%20Training%20for%20Your%20Geiger%20Counter-v1.0.pdf/download

For a lot more in-depth, see Wikipedia.

Of particular relevance in a Poisson distribution is this property:

Average=Variance, or: Standard Deviation = Square root of average.

Note that this implies that the Standard Deviation of a Poisson distribution is NOT a free parameter; this is one major difference from a Normal Distribution!
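The Average=Variance property is easy to check on simulated data. The following is a small sketch using only the Python standard library; the helper `poisson_sample`, the seed, and the sample size are arbitrary choices for illustration, and the mean of 12.75 is taken from the CPS1st average reported in the first post.

```python
import math
import random
import statistics


def poisson_sample(mean, rng):
    """Draw one Poisson-distributed integer (Knuth's method, fine for small means)."""
    limit = math.exp(-mean)
    k, product = 0, rng.random()
    while product > limit:
        k += 1
        product *= rng.random()
    return k


rng = random.Random(1)  # fixed seed so the run is repeatable
cps = [poisson_sample(12.75, rng) for _ in range(20000)]

average = statistics.fmean(cps)
variance = statistics.pvariance(cps)
print(f"average={average:.2f} variance={variance:.2f} ratio={variance / average:.3f}")
```

For genuine Poisson data the variance/average ratio stays close to 1. For the CPM1st data from the first post the same ratio is roughly 13600/770, i.e. almost 18.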

---

I continued the recording and the outcome did not change from the first post. But some more analysis gave interesting results.

Applying the FFT to the CPM data gives this picture:

The upper and lower right graphs show a pronounced dip at a time period of 3 sec (frequency 1/3 Hz); lower left shows an auto-correlation lag (blue line) also of up to 3 sec. There should not be anything at 3 seconds in any of these graphs! Instead there should be a structure as shown and explained in the GeigerLog manual, pages 35-36: https://sourceforge.net/projects/geigerlog/files/GeigerLog-Manual-v1.0.pdf/download

The GMC 500+ Date&Time is by now too fast by 59 sec over almost 2 days. That is a lot! The timer seems broken. I am still wondering whether there is any causal relationship between these observations.

Looking at the counts of the 2nd tube (the low sensitivity tube) gives further insight into this messed-up situation:

CPS is in black, CPM in red. CPM is steady at a minimum of 5, while it should drop down to zero. When CPS registers one single count, the CPM should increase by 1, but it increases by 20! And this count increase should last for 60 sec in the CPM track, but instead it drops back to zero (actually to 5) already in the next second. And that is not even consistent, as it sometimes drops by 5, sometimes by 6.
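The expected behaviour described here can be sketched as a sliding 60-second sum of the CPS values. This is an illustration of what a per-second CPM readout should do, not the firmware's algorithm; `rolling_cpm` is a made-up name.

```python
from collections import deque


def rolling_cpm(cps_values, window_s=60):
    """Yield the sum of the most recent `window_s` CPS values after each second."""
    window = deque(maxlen=window_s)  # oldest value drops out automatically
    total = 0
    for cps in cps_values:
        if len(window) == window_s:
            total -= window[0]  # value about to leave the window
        window.append(cps)
        total += cps
        yield total


# One single count at second 10 in an otherwise silent 100-second record.
cps = [0] * 100
cps[10] = 1
cpm = list(rolling_cpm(cps))
print(cpm[9], cpm[10], cpm[69], cpm[70])  # prints: 0 1 1 0
```

The single count raises the CPM by 1, stays visible for exactly 60 seconds, and then the CPM returns to zero.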

Here the tabular data for easy inspection:

This is wrong in so many ways, I am speechless.

Wonder what ZLM and Emfdev have to say?

|

| Damien68 |

Posted - 12/25/2020 : 02:12:01

I'm not sure how you obtain your CPM values, but if you pull a live CPM value through the UART (USB), it's not a CPM in the strict sense; depending on the firmware, it might be an estimate produced by an algorithm designed to improve reactivity. In any case, mathematically, we can't be sure we are talking about CPM. That could explain slight distortions (inconsistencies) between the CPS and CPM statistics.

I also have the impression that during the 12 hours of testing you must have moved something a little bit: in the CPS statistics we see 2 close modes (peak flattening), which is amplified in the CPM histogram, but that can also come from a random effect. |

| Damien68 |

Posted - 12/24/2020 : 06:39:34

Hi ullix,

I don't understand your first histogram with the CPM data.

Apparently the two diagrams come from the same measurements made over 41651 samples (at 1 sample/sec), so over 11.57 hours.

To build the CPM histogram, we should group the CPS data in packets of 60 by adding them. We should therefore end up with statistics over 694 CPM values, but on your CPM histogram we still have the same overall count of 41651 events (samples).
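The grouping into packets of 60 can be sketched as follows. The function name `cps_to_cpm` and the constant placeholder data are for illustration only; the real input would be the logged CPS column.

```python
def cps_to_cpm(cps_values, block=60):
    """Sum CPS values in consecutive, non-overlapping blocks of `block` seconds."""
    full_blocks = len(cps_values) // block  # an incomplete final minute is dropped
    return [sum(cps_values[i * block:(i + 1) * block]) for i in range(full_blocks)]


# Placeholder data with the same length as the recording discussed here.
cps = [13] * 41651
cpm = cps_to_cpm(cps)
print(len(cpm))  # prints: 694
```

Because the blocks do not overlap, the resulting CPM values are independent of each other, which is what a Poisson test on CPM requires.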

Out of curiosity I plotted the Gaussian corresponding to the following data:

avg= 770

sd= 13626^(1/2) ≈ 117

using the following link: https://www.medcalc.org/manual/normal_distribution_functions.php

I then get the following curve:

This gives the normalized Gaussian, so you have to multiply it by the total number of samples (41651) and then also by 33, because in your histogram, to limit the number of bars in the graph, the events are grouped in packs of 33 consecutive values (e.g. 0 to 32; 33 to 65; 66 to...), so each bar value is an aggregation (sum) of 33 discrete values.

By doing this we find good agreement with the data in blue of your first histogram, with a peak (maximum) of 4673 events (see the graph above), so I think there is a bug in your algorithm for plotting the reference Gaussian.

PS: multiplying by 33 is a fairly reliable approximation, but we can be more precise by calculating, for each bar interval, the sum of the normalized Gaussian values of each aggregated discrete value.

Afterwards, you just have to multiply each sum by the overall event count (in this case 41651) and plot this as the reference.
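Both ways of scaling the reference curve can be sketched in a few lines. Note two assumptions: the more precise variant below integrates the Gaussian between the bar edges (a CDF difference) instead of summing it over the discrete values, and the bar is simply centred on the mean, since the real bar edges of the histogram are not known here.

```python
from statistics import NormalDist

N_SAMPLES = 41651      # number of CPM readings in the histogram
BIN_WIDTH = 33         # values per histogram bar
dist = NormalDist(mu=770, sigma=13626 ** 0.5)


def expected_count_approx(center):
    """Density at the bar centre times bar width times sample count."""
    return N_SAMPLES * BIN_WIDTH * dist.pdf(center)


def expected_count_integrated(low, high):
    """Probability mass between the bar edges times sample count."""
    return N_SAMPLES * (dist.cdf(high) - dist.cdf(low))


# A bar centred on the mean (assumed position, see above).
low, high = 770 - BIN_WIDTH / 2, 770 + BIN_WIDTH / 2
print(round(expected_count_approx(770)), round(expected_count_integrated(low, high)))
```

Both numbers land in the same region as the peak quoted above; the exact value depends on the rounding of the standard deviation and on where the bars start.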

If the clock shifts by 20 seconds in 12 hours, this makes a drift of 0.046% or 463 ppm.

463 ppm is out of spec for a quartz crystal; for reference, the typical maximum drift for a Real Time Clock is around 30 ppm (i.e. about 2.6 seconds per day). But it may be a problem of interpretation, or not...
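The conversion between an observed shift and a drift in ppm is plain arithmetic; a short sketch with illustrative function names:

```python
SECONDS_PER_DAY = 24 * 3600


def drift_to_ppm(drift_s, elapsed_s):
    """Clock drift expressed in parts per million of the elapsed time."""
    return drift_s / elapsed_s * 1e6


def ppm_to_seconds_per_day(ppm):
    """Seconds gained or lost per day at a given drift in ppm."""
    return ppm * 1e-6 * SECONDS_PER_DAY


print(f"{drift_to_ppm(20, 12 * 3600):.1f} ppm")             # 20 s in 12 h
print(f"{ppm_to_seconds_per_day(30):.2f} s/day at 30 ppm")  # typical RTC limit
```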

|

|

|